
COMPLETED

The ability to feed RGB(A) data into the Texture2D node, not just a Texture Asset.

There are plenty of times when it would be great to resample RGB data after it has been modified. Being able to change the UVs after the fact would be very useful and could optimize many of my shaders.

Answer

Declined

I'm not sure what you mean. You can't "change the UV after the fact"; in that case you have to sample it again, which is what the Texture Asset node is for!


The only thing that can be plugged into the Tex slot of the Texture2D node is a Texture Asset node. What I want is to plug other data into that slot. In other words, I want to take RGB data that has been changed by other nodes (Multiply and such) and feed it back into a Texture2D node, so that I can change the UV coordinates *after* it has already been modified. So yes, I want to sample it again, but at the moment I can't.

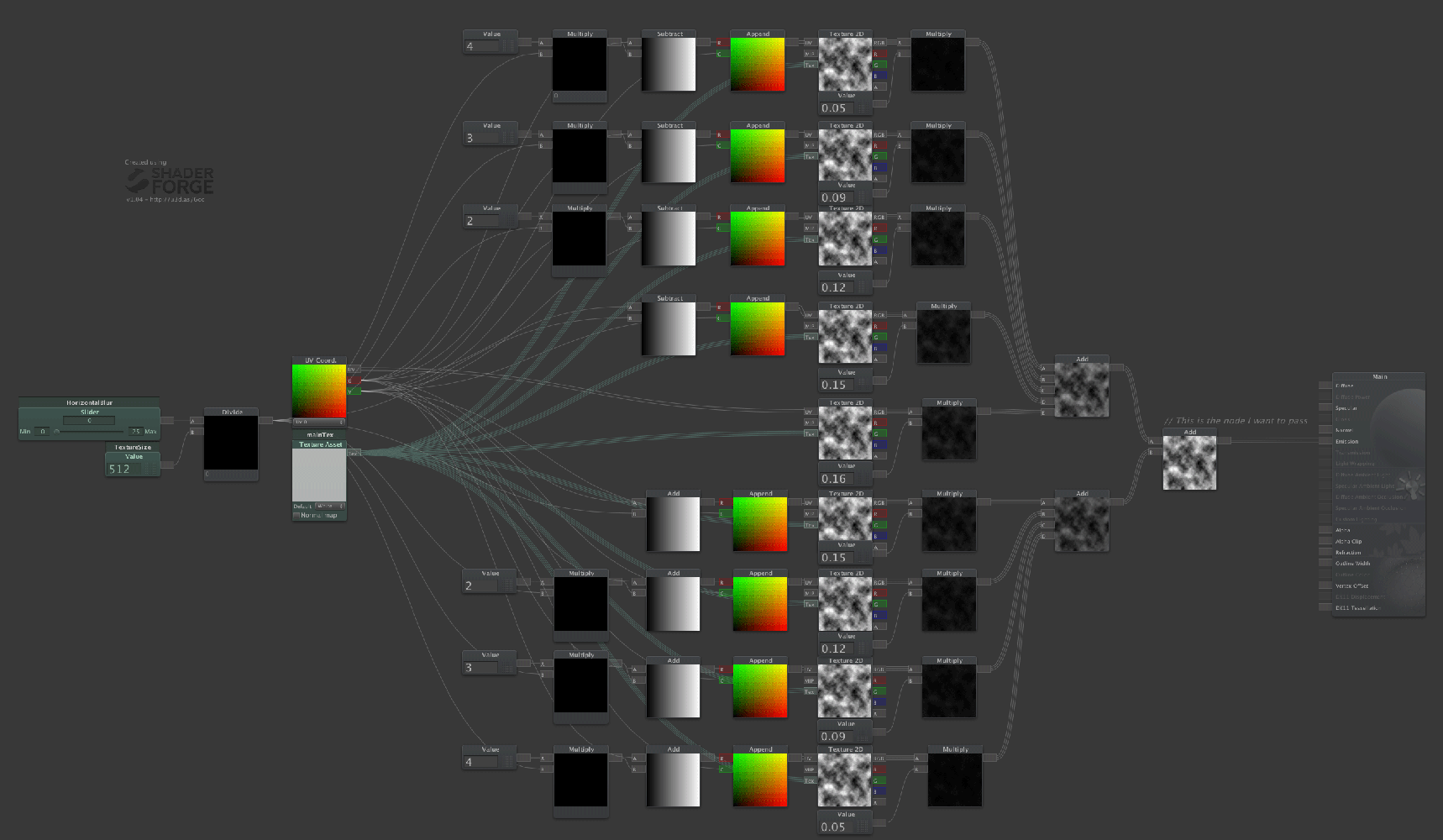

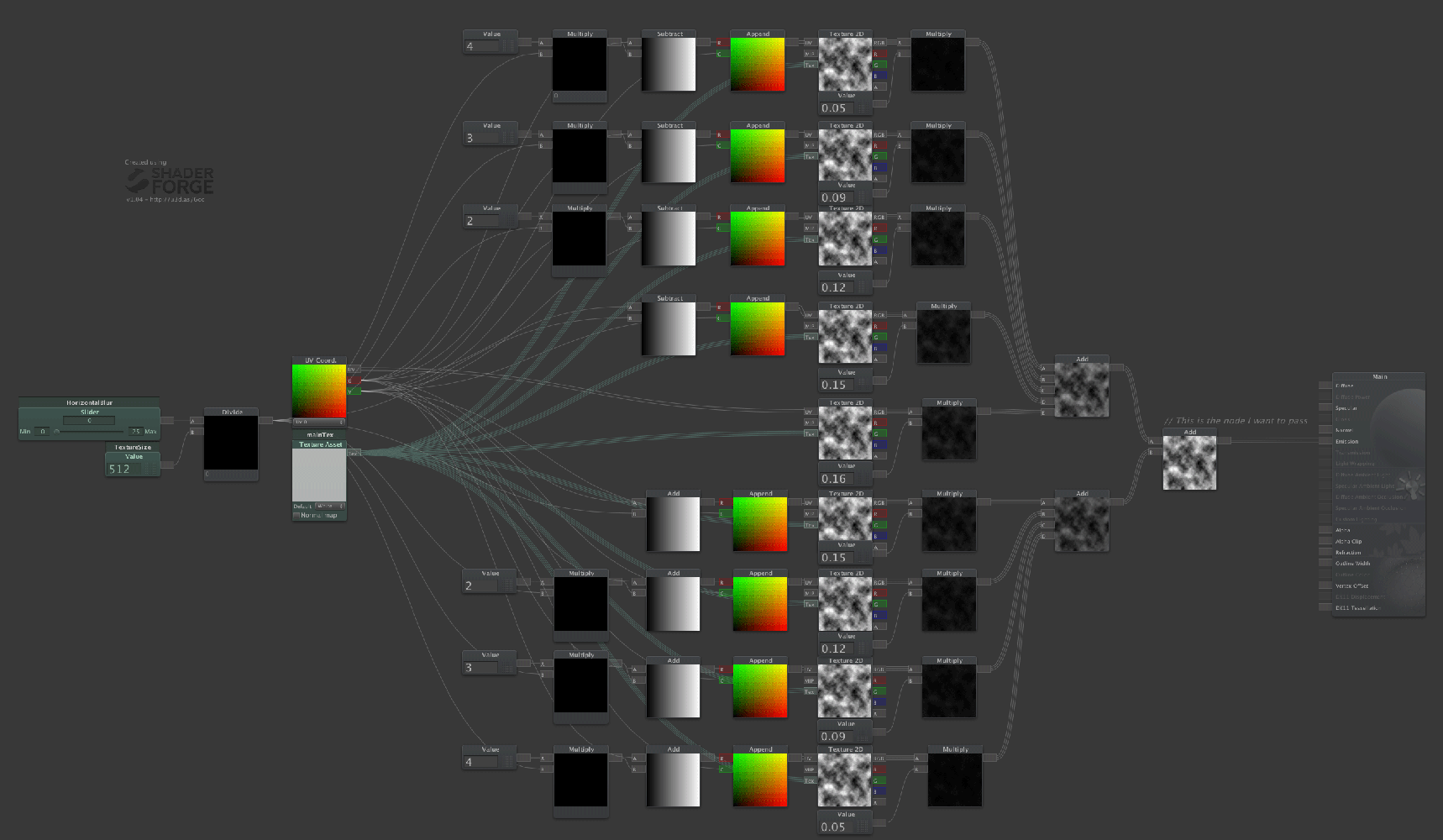

Here's an example of what I mean.

http://www.gamerendering.com/2008/10/11/gaussian-blur-filter-shader/

Here they take the scene color data, sample it at several offsets along the horizontal axis, and sum it all up. Then they take that sum and do the same thing along the vertical axis. They are resampling modified data, not a raw asset. I would like Shader Forge to be able to do the same thing.
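The per-tap weights in a blur like the one on that page are, in general, samples of a Gaussian curve normalized so that they sum to 1. The sketch below computes such weights in Python (chosen only because the GLSL and Unity C# in this thread cannot run on their own). The `radius` and `sigma` values are illustrative assumptions, not the values used on the linked page.

```python
import math

def gaussian_weights(radius, sigma):
    """Weights for taps at offsets -radius..radius, normalized to sum to 1."""
    raw = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(raw)
    return [w / total for w in raw]

# Illustrative choice: 9 taps (offsets -4..4) with sigma = 2 texels.
weights = gaussian_weights(radius=4, sigma=2.0)
for offset, w in zip(range(-4, 5), weights):
    print(f"offset {offset:+d}: weight {w:.3f}")
```

Each weight multiplies one sample of the texture taken at that offset; the horizontal pass and the vertical pass reuse the same set of weights.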

Answer

COMPLETED

Which is exactly what you can do with the Texture Asset node :)

You *have* to use the Texture2D node multiple times; there's no way of "moving" a sampler other than sampling again at another location. Look at the page you linked:

```glsl
sum += texture2D(RTScene, vec2(vTexCoord.x - 4.0*blurSize, vTexCoord.y)) * 0.05;
sum += texture2D(RTScene, vec2(vTexCoord.x - 3.0*blurSize, vTexCoord.y)) * 0.09;
sum += texture2D(RTScene, vec2(vTexCoord.x - 2.0*blurSize, vTexCoord.y)) * 0.12;
// ...
```

Each texture2D(...) call is another Texture2D sample node :)

Simply connect the same Texture Asset to multiple Texture2D nodes, give each one a different UV, then sum the results.
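To make that concrete: one pass can take several samples of the *same* texture at shifted UVs and add them, but it cannot re-sample a value it computed itself. Below is a minimal CPU-side sketch in Python, assuming a single-channel texture stored as a list of rows, nearest-neighbour sampling with clamped UVs (a GPU sampler would normally filter bilinearly), and a hypothetical 5-tap kernel. `sample` and `horizontal_blur_pixel` are illustrative names, not Shader Forge nodes.

```python
import math

def sample(tex, u, v):
    """Nearest-neighbour lookup of a single-channel texture at normalized UV (u, v).

    Coordinates outside [0, 1) are clamped to the edge texel, like a clamp sampler."""
    height, width = len(tex), len(tex[0])
    x = min(width - 1, max(0, math.floor(u * width)))
    y = min(height - 1, max(0, math.floor(v * height)))
    return tex[y][x]

# Hypothetical 5-tap kernel: (offset in texels, weight); the weights sum to 1.
TAPS = [(-2, 0.1), (-1, 0.2), (0, 0.4), (1, 0.2), (2, 0.1)]

def horizontal_blur_pixel(tex, u, v):
    """One 'fragment': sample the SAME texture at shifted UVs and add the results."""
    texel_width = 1.0 / len(tex[0])
    total = 0.0
    for offset, weight in TAPS:
        # each call here corresponds to one Texture2D node with its own UV input
        total += sample(tex, u + offset * texel_width, v) * weight
    return total

row = [[0, 0, 1, 0, 0]]  # a 5x1 texture with a single bright texel
print([round(horizontal_blur_pixel(row, (x + 0.5) / 5, 0.5), 3) for x in range(5)])
# prints [0.1, 0.2, 0.4, 0.2, 0.1]
```

Each `sample(...)` call plays the role of one Texture2D node fed by the same Texture Asset, and the running `total` plays the role of the chain of Add nodes.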

Yes, that gets you halfway, but I think Kevin is right: in the example on that page, the pixel shader has

```glsl
uniform sampler2D RTBlurH; // this should hold the texture rendered by the horizontal blur pass
```

This "holding" is the thing that's not possible in SF: resampling raw data rather than an asset. I don't know if it's possible, but it would be good.

It is possible, but if you look at the site, there are two shaders, not one :)

So, you can make both of these shaders, render the first one into a Render Texture, then pass that render texture (RTBlurH) into the second shader.
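The two-shader approach can be sketched the same way: pass 1 writes its output for every pixel into an intermediate buffer (standing in for the `RTBlurH` render texture), and only then can pass 2 sample that stored result at shifted coordinates. This Python illustration makes simplifying assumptions (integer texel coordinates, edge clamping, a hypothetical 3-tap kernel); `run_pass`, `blur_h` and `blur_v` are made-up names.

```python
# Hypothetical 1D kernel: (offset in texels, weight).
KERNEL = [(-1, 0.25), (0, 0.5), (1, 0.25)]

def fetch(tex, x, y):
    """Read texel (x, y), clamping coordinates to the texture edge."""
    h, w = len(tex), len(tex[0])
    return tex[min(h - 1, max(0, y))][min(w - 1, max(0, x))]

def run_pass(src, fragment):
    """Like drawing a full-screen quad: evaluate fragment(src, x, y) for every
    pixel and keep the results, i.e. render them into a new texture."""
    h, w = len(src), len(src[0])
    return [[fragment(src, x, y) for x in range(w)] for y in range(h)]

def blur_h(src, x, y):
    """Horizontal taps, weighted and summed."""
    return sum(fetch(src, x + d, y) * k for d, k in KERNEL)

def blur_v(src, x, y):
    """Vertical taps, weighted and summed."""
    return sum(fetch(src, x, y + d) * k for d, k in KERNEL)

scene = [[0, 0, 0],
         [0, 1, 0],
         [0, 0, 0]]
rt_blur_h = run_pass(scene, blur_h)  # shader 1 -> stored like the RTBlurH render texture
final = run_pass(rt_blur_h, blur_v)  # shader 2 samples what shader 1 stored
for row in final:
    print(row)
```

Running the two 3-tap passes gives the same result as one 3x3 pass whose kernel is the outer product of the 1D kernel, which is why the separable version is cheaper (6 samples per pixel instead of 9 here).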

Eeep, seems I've hit a gap: how do you "render the first one into a Render Texture"? If I'm blurring the scene and have a screen-space quad, then okay, I get the use of a render texture. But are you referring to some other magic where I can pipe the shader output back into a render texture (without a Unity camera), so that I can use this technique to blur any texture? In a lot of ways this is all academic, as there are some good screen-space blurs among the camera effects, and mipmaps will do most of the time, but...

As I read it, Graphics.Blit still needs a texture as its source, and the result of the first shader (in the example) exists as data rather than as a texture. Am I wrong?

I feel like an idiot, but I'll pursue this to the end: if the arguments to Graphics.Blit(Texture, RenderTexture) expect two textures, how can I pass in the result of the first shader? If the shader is built with Shader Forge, what I want to pass into the Blit is the result of nine summed samples, which is not a texture at all. It doesn't exist in the right format, so it can't be blitted.

You want this one:

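```csharp
// The overload that takes a material and a shader pass index
public static void Blit(Texture source, RenderTexture dest, Material mat, int pass = -1);
```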

1. Get the current screen contents into a texture, probably a render texture, called sceneContent.

2. Blit into a RenderTexture called hBlurredRT, using the horizontal blur material (which uses the horizontal blur shader).

3. Blit again, with hBlurredRT as the source, into another render texture called finalRT, using the vertical blur material.

```csharp
RenderTexture sceneContent; // RT with the scene content
RenderTexture hBlurredRT;   // RT with the scene, horizontally blurred
RenderTexture finalRT;      // Final render; draw this to the screen after blurring
Material hBlur;             // Horizontal blur material
Material vBlur;             // Vertical blur material
```

Then, when you want to render:

```csharp
Graphics.Blit(sceneContent, hBlurredRT, hBlur, 0); // Blur sceneContent horizontally with hBlur; store the output in hBlurredRT
Graphics.Blit(hBlurredRT, finalRT, vBlur, 0);      // Blur hBlurredRT vertically with vBlur; store the output in finalRT
```

Note that this assumes the main input texture in both shaders is internally named `_MainTex`, since that is the property Blit assigns the source texture to.
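As for how the Add node's output gets into a texture: conceptually, `Graphics.Blit(source, dest, mat, pass)` sets `source` as the material's `_MainTex`, runs the material's shader once for every pixel of `dest`, and stores each pixel's result there, so the Add result of the first shader *becomes* the contents of `hBlurredRT`. The toy Python model below illustrates that idea. `Material`, `blit`, `new_render_texture` and the list-of-rows textures are hypothetical stand-ins, not Unity's API, and shader pass indices are ignored. The input is an arbitrary small texture rather than scene content, since Blit does not care where the source came from.

```python
import math

class Material:
    """Hypothetical stand-in for a material: a fragment function plus properties."""
    def __init__(self, fragment):
        self.fragment = fragment  # fragment(properties, u, v) -> float
        self.properties = {}

def new_render_texture(width, height):
    """A blank single-channel 'render texture' as a list of rows."""
    return [[0.0] * width for _ in range(height)]

def sample(tex, u, v):
    """Nearest-neighbour lookup at normalized UV, clamped to the edges."""
    h, w = len(tex), len(tex[0])
    x = min(w - 1, max(0, math.floor(u * w)))
    y = min(h - 1, max(0, math.floor(v * h)))
    return tex[y][x]

def blit(source, dest, material):
    """Bind source as _MainTex, run the fragment for every dest pixel, store results."""
    material.properties["_MainTex"] = source
    h, w = len(dest), len(dest[0])
    for y in range(h):
        for x in range(w):
            u, v = (x + 0.5) / w, (y + 0.5) / h  # UV of the pixel centre
            dest[y][x] = material.fragment(material.properties, u, v)

def h_blur(props, u, v):
    """Three samples of _MainTex along u, weighted and added (the 'Add node')."""
    tex = props["_MainTex"]
    du = 1.0 / len(tex[0])
    return (0.25 * sample(tex, u - du, v)
            + 0.5 * sample(tex, u, v)
            + 0.25 * sample(tex, u + du, v))

def v_blur(props, u, v):
    """Same as h_blur, but along v."""
    tex = props["_MainTex"]
    dv = 1.0 / len(tex)
    return (0.25 * sample(tex, u, v - dv)
            + 0.5 * sample(tex, u, v)
            + 0.25 * sample(tex, u, v + dv))

some_texture = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # any texture, not the scene
h_blurred_rt = new_render_texture(3, 3)
final_rt = new_render_texture(3, 3)
blit(some_texture, h_blurred_rt, Material(h_blur))  # pass 1: its per-pixel sums land in h_blurred_rt
blit(h_blurred_rt, final_rt, Material(v_blur))      # pass 2: samples what pass 1 stored
for row in final_rt:
    print(row)
```

The second Blit never sees the first shader's node graph, only the texels that shader wrote, which is the same relationship `RTBlurH` has to the second shader on the linked page.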

Thanks for this, and for the trouble you've taken. I can see how this works when the screen contents are in a render texture, since blitting that is no problem. My question was about being able to blur a texture, not the scene. It looks like it can't be done.

You can do that too. Just pass in a regular texture instead of the scene content and you get the same result. It's a bit trickier than modifying regular textures, but it should work. That said, it would be easier with multi-pass shaders, which aren't possible in SF at the moment.

Here's a graph, as an illustration. It's the first shader of the two-part shader that the original poster (Kevin) linked to. The output of this shader is an Add node. It works, and it's lovely.

The second shader is where my question comes in. I know that you can make a screen-size quad in another camera, assign this shader's material to it, render that into a render texture, and then pass it to the second shader, which does the vertical blur.

My question is: can I pass the Add result of the first shader into Graphics.Blit? I know I can pass a regular texture, but that will always be unmodified.

The reason I'm spending so long on this (and thanks for your patience) is that I want to know whether there's any way to pass ordinary node data (in this case, the Add node) to Graphics.Blit, which only accepts textures. If I could, that would obviously be great for post effects.
