
Completed

Matrix Support for Multiply?

I'd like to be able to do my own hand-rolled matrix multiplies (for instance, to decode scale lightmaps properly). To do that, I need to multiply my normal by unity_DirBasis, which is a 3x3 matrix.

Another option would be to support the lightmap decode options as nodes.
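
Multiplying the normal by unity_DirBasis, as described above, is an ordinary 3x3 matrix-vector multiply. Below is a minimal Python sketch of what such a node would compute. It assumes HLSL's `mul(matrix, vector)` convention, where each output component is the dot product of one matrix row with the vector. The `basis` values are placeholders, not Unity's actual unity_DirBasis contents.

```python
from typing import List, Sequence


def mul_mat3_vec3(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector, like HLSL mul(float3x3, float3).

    Each output component is the dot product of one matrix row with v.
    """
    if len(m) != 3 or any(len(row) != 3 for row in m) or len(v) != 3:
        raise ValueError("expected a 3x3 matrix and a 3-component vector")
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


# Placeholder basis, NOT Unity's actual unity_DirBasis values:
# each row is one unit-length basis direction.
basis = [
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
]
normal = [0.0, 0.6, 0.8]  # unit-length surface normal

# With unit rows and a unit normal, each component is the cosine between
# the normal and one basis direction.
weights = mul_mat3_vec3(basis, normal)
print(weights)  # [0.8, 0.0, 0.6]
```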



Freya Holmér (Developer) 10 years ago

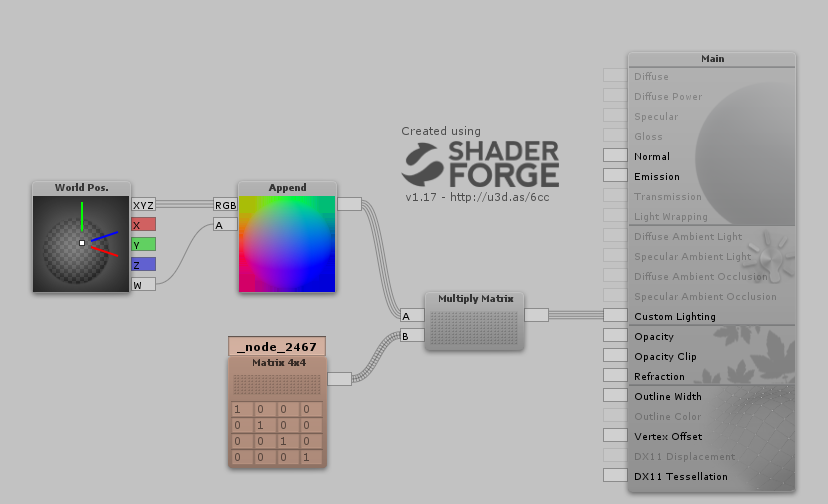

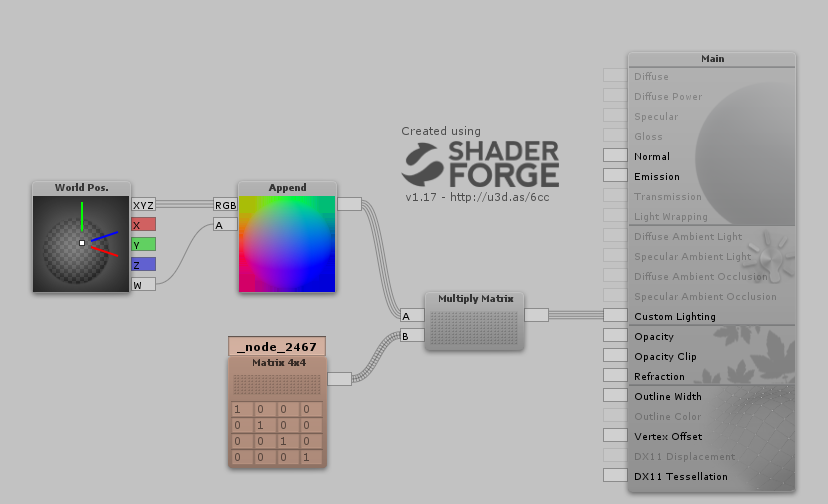

This has now been implemented in 1.17 :)

This may come at some point, but it's not a high priority at the moment! It would be nice to have the matrix data types, though :)

Matrix multiplication is required for shaders that use Shader.SetGlobalMatrix. In particular, it is needed to light particles correctly, so it would be great to have this in ShaderForge.
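
Shader.SetGlobalMatrix uploads a 4x4 matrix, which the shader reads as a float4x4. The Python sketch below shows how such a matrix would typically be applied to a position. It assumes the HLSL `mul(matrix, vector)` convention with the translation stored in the last column. `world_to_light` and `translation` are hypothetical names used only for illustration.

```python
from typing import List, Sequence


def mul_mat4_vec4(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Multiply a 4x4 matrix by a 4-vector, like HLSL mul(float4x4, float4)."""
    if len(m) != 4 or any(len(row) != 4 for row in m) or len(v) != 4:
        raise ValueError("expected a 4x4 matrix and a 4-component vector")
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def translation(tx: float, ty: float, tz: float) -> List[List[float]]:
    """Hypothetical helper: 4x4 translation matrix, offset in the last column."""
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Hypothetical global matrix, standing in for whatever the C# side would
# upload with Shader.SetGlobalMatrix.
world_to_light = translation(-2.0, 0.0, 5.0)
particle_pos = [1.0, 2.0, 3.0]

# Positions use w = 1 so the translation column is applied.
light_space_pos = mul_mat4_vec4(world_to_light, particle_pos + [1.0])
print(light_space_pos)  # [-1.0, 2.0, 8.0, 1.0]
```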

Found a bug in the matrix multiply node. Create this shader graph:

Close it, then reopen it. The A input of the multiply matrix node gets disconnected and there's an 'invalid input' error in the console.


I just put together a shader with the Multiply Matrix node and came across the same bug. Close the shader, reopen it, and the A input of the Append node is disconnected!

Actually, it seems like more often it's the A input of the Multiply Matrix node >_<

+2

Also, could the matrix multiply node please accept a Vector3 input? Right now we have to append a 0 at the end of every Vector3 we want to multiply by the matrix :)
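
The "append a 0" workaround works because a 4-vector with w = 0 is treated as a direction: the translation column is multiplied by zero, so the result equals the upper-left 3x3 block times the Vector3. The Python sketch below checks this, assuming the HLSL `mul(matrix, vector)` convention. A Vector3 input on the node could plausibly behave the same way, but that is an assumption, not how ShaderForge is known to implement it. The matrix `m` is a hypothetical rotation-plus-translation.

```python
from typing import List, Sequence


def mul_mat4_vec4(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Multiply a 4x4 matrix by a 4-vector, like HLSL mul(float4x4, float4)."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def mul_mat3_vec3(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector, like HLSL mul(float3x3, float3)."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def upper_left_3x3(m: Sequence[Sequence[float]]) -> List[List[float]]:
    """Drop the translation column and the bottom row of a 4x4 matrix."""
    return [list(row[:3]) for row in m[:3]]


# Hypothetical matrix: 90-degree rotation about Z plus a translation.
m = [
    [0.0, -1.0, 0.0, 10.0],
    [1.0, 0.0, 0.0, 20.0],
    [0.0, 0.0, 1.0, 30.0],
    [0.0, 0.0, 0.0, 1.0],
]
direction = [1.0, 0.0, 0.0]

as_direction = mul_mat4_vec4(m, direction + [0.0])  # the "append 0" workaround
as_point = mul_mat4_vec4(m, direction + [1.0])      # w = 1 picks up translation
via_3x3 = mul_mat3_vec3(upper_left_3x3(m), direction)

print(as_direction[:3])  # [0.0, 1.0, 0.0]
print(via_3x3)           # [0.0, 1.0, 0.0]
print(as_point)          # [10.0, 21.0, 30.0, 1.0]
```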
