
Edit - thanks for your indulgence. I just figured all this out.

10 years ago

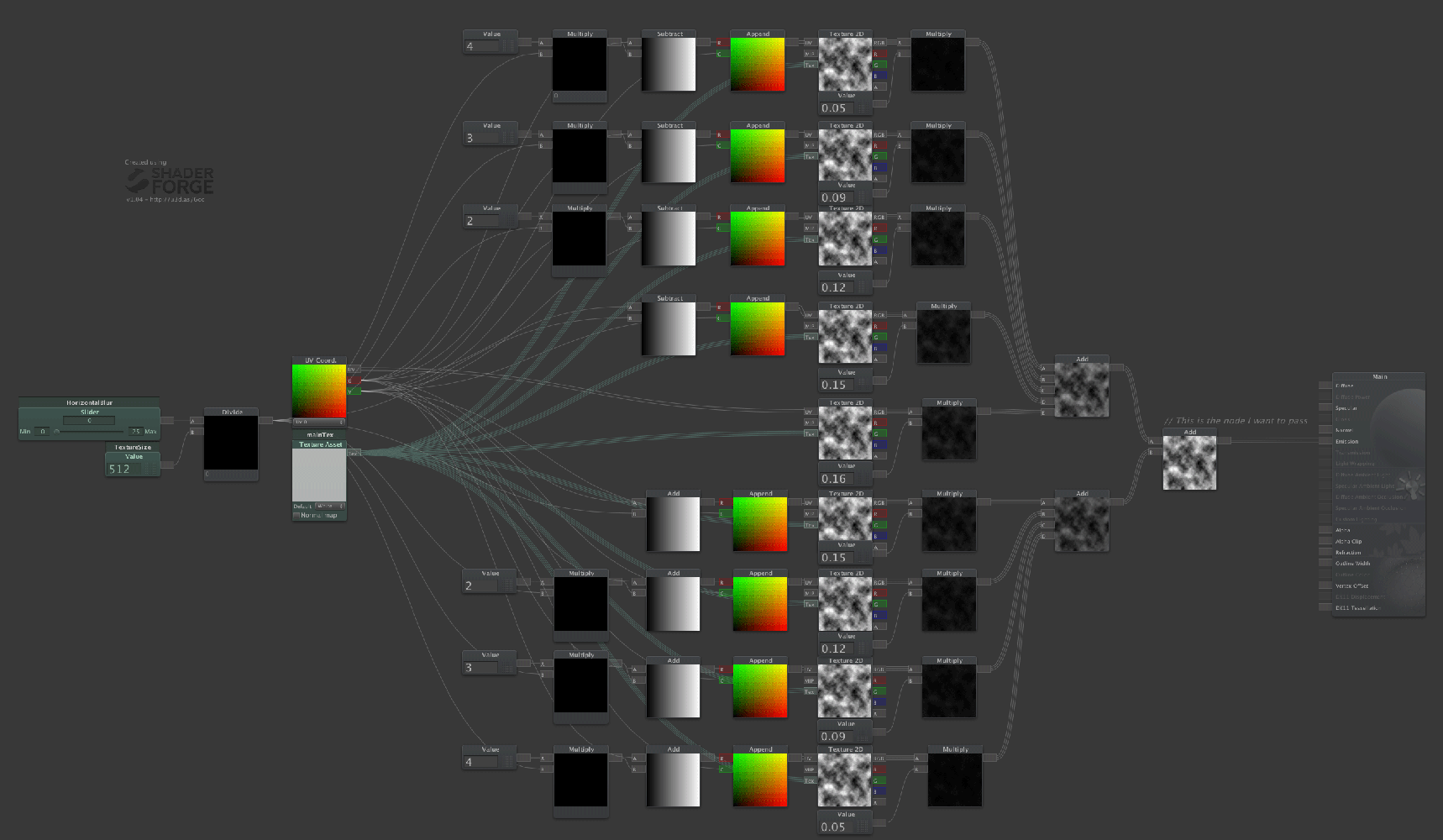

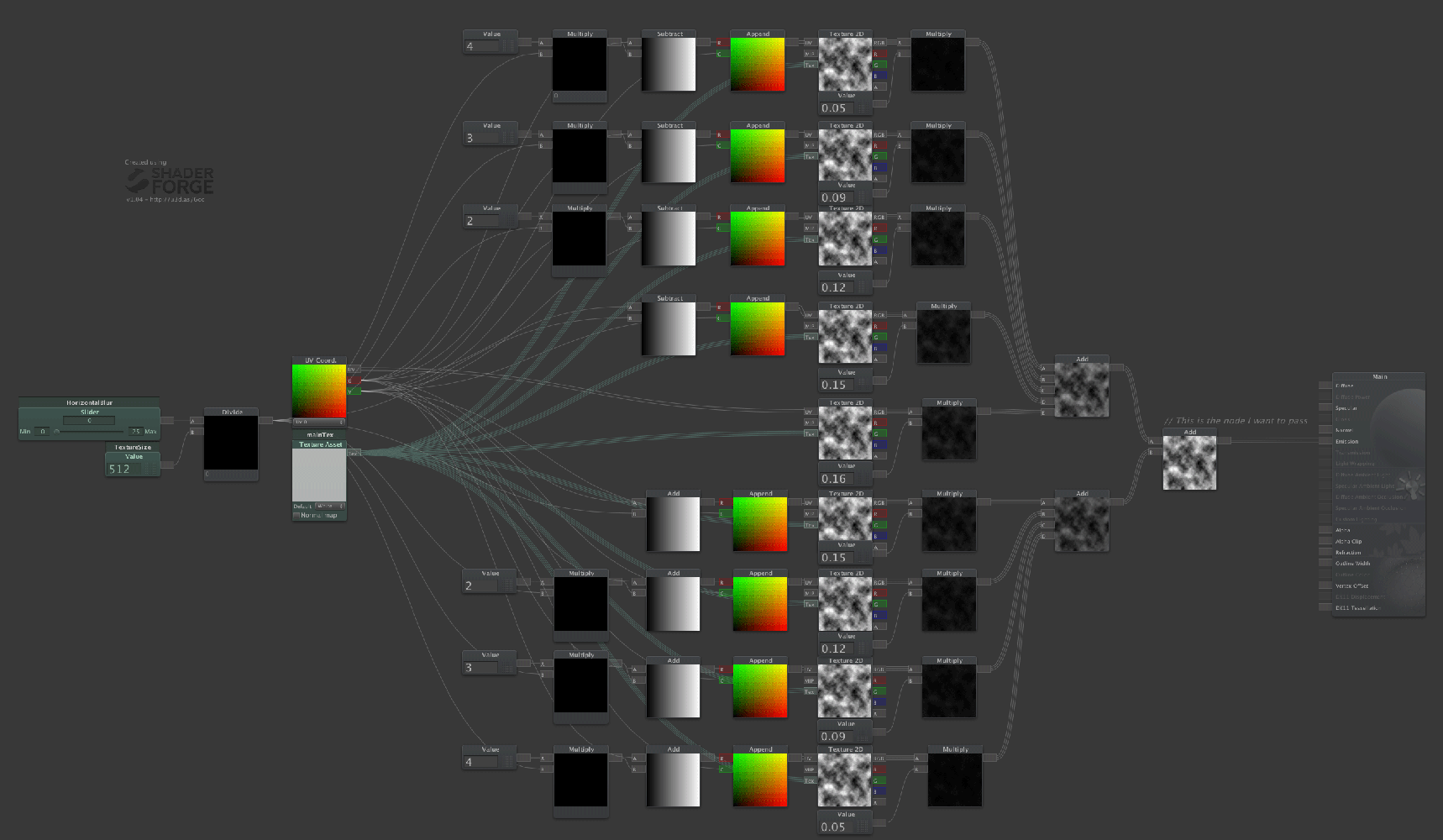

Here's a graph as an illustration. It's the first shader of the two-part shader that the original poster (Kevin) linked to. The result of this shader is an Add node. It works, and it's lovely.

The second shader is where my question comes in. I know that you can make a screen-size quad in another camera, assign it this shader's material, and then pass the result as a RenderTexture to the second shader, which does the vertical blur.
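To make the two-pass idea concrete, here is a minimal CPU-side sketch in Python. It is not Unity or Shader Forge code. Images are assumed to be plain lists of rows of floats. The `intermediate` buffer stands in for the RenderTexture that the horizontal pass is rendered into. The kernel weights and all function names are hypothetical, chosen for illustration.

```python
# CPU-side sketch of a two-pass (separable) blur. Images are plain lists of
# rows of floats; "intermediate" stands in for the RenderTexture that the
# first, horizontal pass is rendered into.

def sample(img, x, y):
    """Clamp-to-edge lookup, like a texture whose wrap mode is Clamp."""
    h, w = len(img), len(img[0])
    return img[min(h - 1, max(0, y))][min(w - 1, max(0, x))]


def blur_pass(img, weights, dx, dy):
    """Each output pixel is a weighted sum of taps along the direction (dx, dy)."""
    r = len(weights) // 2
    h, w = len(img), len(img[0])
    return [
        [
            sum(wt * sample(img, x + (i - r) * dx, y + (i - r) * dy)
                for i, wt in enumerate(weights))
            for x in range(w)
        ]
        for y in range(h)
    ]


def separable_blur(img, weights):
    intermediate = blur_pass(img, weights, 1, 0)   # pass 1: horizontal, stored
    return blur_pass(intermediate, weights, 0, 1)  # pass 2: vertical, reads pass 1


# Hypothetical 3-tap kernel and a single bright pixel, for illustration only.
WEIGHTS = [0.25, 0.5, 0.25]
img = [[0.0] * 5 for _ in range(5)]
img[2][2] = 1.0
out = separable_blur(img, WEIGHTS)
```

The vertical pass reads neighbouring texels of the horizontal result, not just the pixel it is writing. That is why the horizontal result has to be stored somewhere before the second pass runs.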

My question is: can I pass the Add result of the first shader into Graphics.Blit? I know I can pass a regular texture, but that will always be unmodified.

The reason I'm spending so long on this (and thanks for your patience) is that I want to know whether there is any way to pass regular node data (in this case, the Add node) to Graphics.Blit, which only accepts textures. If I could, that would obviously be great for post effects.

Thanks for this, and for the trouble you've taken. I can see how this works when the screen contents are used as a render texture, since blitting those is no problem. My question was about the ability to blur a texture, not the scene. It looks like it can't be done.

I feel like an idiot, but I will pursue this to the end. If the arguments for Graphics.Blit(Texture, RenderTexture) expect two textures, how can I pass the result of the first shader? When built with Shader Forge, the thing I want to pass into the blit is the result of 9* sums, and is not a texture at all. It doesn't exist in the right format, so it can't be blitted.

My reading is that Graphics.Blit still needs a texture as its source, while the result of the first shader (in the example) exists as data rather than as a texture. Am I wrong?
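Unity's Graphics.Blit also has an overload that takes a Material, `Graphics.Blit(source, dest, mat)`. It draws a full-screen quad into `dest` with that material's shader and binds `source` as `_MainTex`, so the shader's per-pixel output is what gets written into `dest`. The Python below is only a CPU-side model of that idea, assuming greyscale images stored as lists of rows. `blit`, `add_node` and `make_horizontal_average` are hypothetical names, not Unity API.

```python
# CPU-side model of a material-based blit. "fragment" stands in for the
# shader: it runs once per destination pixel with that pixel's UV and a
# sampler for the source texture (the role _MainTex plays in Unity).

def make_sampler(tex):
    """Nearest-neighbour sampler with clamp-to-edge addressing."""
    h, w = len(tex), len(tex[0])

    def sample(u, v):
        x = min(w - 1, max(0, int(u * w)))
        y = min(h - 1, max(0, int(v * h)))
        return tex[y][x]

    return sample


def blit(source, fragment, width, height):
    """Run `fragment` for every pixel of a width x height target and store the results."""
    main_tex = make_sampler(source)
    return [
        [fragment((x + 0.5) / width, (y + 0.5) / height, main_tex)
         for x in range(width)]
        for y in range(height)
    ]


def add_node(u, v, main_tex):
    # Hypothetical first-pass "shader": texture sample plus a constant.
    return main_tex(u, v) + 0.25


def make_horizontal_average(width):
    # Hypothetical second-pass "shader": reads the stored first-pass result,
    # including the texel to the right, which only works because pass 1
    # was written into a buffer.
    texel = 1.0 / width

    def fragment(u, v, main_tex):
        return 0.5 * (main_tex(u, v) + main_tex(u + texel, v))

    return fragment


src = [[0.0, 1.0, 0.0, 1.0]]                          # a 4x1 "texture"
rt = blit(src, add_node, 4, 1)                        # Add result, now stored
out = blit(rt, make_horizontal_average(4), 4, 1)      # second pass samples it
```

Once the Add result has been written out (`rt` here), it is ordinary texture data and can be the source of another blit.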

Eeep, seems I've hit a gap: how do you "render the first one into a Render Texture"? If I'm blurring the scene and have a screen-space quad, then okay, I get the use of a render texture. But are you referring to some other magic where I can pipe the shader output back into a render texture (without a Unity camera), so that I can use this technique to blur any texture? In a lot of ways this is all academic, as there are some good screen-space blurs in the camera Effects, and mipmaps will do most of the time, but...
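On using mips as a cheap blur: each mip level is roughly a 2×2 average of the level above it, so sampling a lower level gives a blocky blur almost for free. The following is a minimal sketch, assuming square power-of-two images and a plain box filter. GPUs and Unity may use a different downsampling filter, and the function names are hypothetical.

```python
def downsample_2x2(img):
    """Average each 2x2 block: one step of a box-filtered mip chain."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
          + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
         for x in range(w)]
        for y in range(h)
    ]


def mip_chain(img):
    """All levels from full size down to 1x1 (assumes power-of-two sizes)."""
    levels = [img]
    while len(levels[-1]) > 1 and len(levels[-1][0]) > 1:
        levels.append(downsample_2x2(levels[-1]))
    return levels


# A single bright texel spreads out (and dims) as the levels get smaller.
img = [[16.0, 0.0, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0]]
levels = mip_chain(img)
```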

Yes, that gets you halfway, but I think Kevin is right: in the example on the page, the pixel shader contains

`uniform sampler2D RTBlurH; // this should hold the texture rendered by the horizontal blur pass`

This "holding" is the thing that's not possible in SF: resampling raw data rather than an asset. I don't know whether it's possible, but it would be good.
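If the intermediate result can't be held in a texture like `RTBlurH`, one fallback is to blur in a single pass and re-evaluate the upstream node (for example the Add) at every tap. This workaround is not taken from the linked example. For a k-tap kernel it costs k×k evaluations per pixel (81 for 9 taps), versus 2k (18) when the horizontal pass is stored. Below is a rough Python sketch; `node` is a hypothetical stand-in for the shader graph.

```python
def blur_by_recomputing(node, width, height, weights):
    """Blur the output of `node` in one pass by re-evaluating it at every tap.

    `node(x, y)` stands in for the upstream shader graph (e.g. an Add node).
    Returns the blurred image and how many times `node` was evaluated.
    """
    r = len(weights) // 2
    calls = 0
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for j, wy in enumerate(weights):
                for i, wx in enumerate(weights):
                    # Clamp taps to the image edges.
                    xx = min(width - 1, max(0, x + i - r))
                    yy = min(height - 1, max(0, y + j - r))
                    total += wx * wy * node(xx, yy)
                    calls += 1
            row.append(total)
        out.append(row)
    return out, calls


WEIGHTS = [0.25, 0.5, 0.25]  # hypothetical 3-tap kernel
base = [[0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0]]


def add_node(x, y):
    # Hypothetical upstream node: texture value plus a constant.
    return base[y][x] + 0.25


blurred, calls = blur_by_recomputing(add_node, 3, 3, WEIGHTS)
```

It works without any intermediate texture, but the per-pixel cost grows with the square of the kernel width, which is what storing the horizontal pass avoids.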

Super cool.

That's what I have been doing, and that's what's been happening. Thanks for your great work!

For:

https://docs.unity3d.com/Documentation/ScriptRefer...

Or full-screen effects where I want to use the depth buffer (and normals? hint, hint?), for example in combination with screen-space UVs.

C
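The following is a rough sketch of the kind of full-screen effect meant here: read the colour and depth buffers at the same screen-space UV and combine them. It rests on several assumptions. Both buffers are greyscale lists of rows. The depth values are already linear in 0..1 (a raw hardware depth buffer usually is not). `depth_fade` and the other names are hypothetical, not anything Shader Forge or Unity provides.

```python
def screen_uv(px, py, width, height):
    """UV of a pixel centre, with (0, 0) at one corner and (1, 1) at the opposite one."""
    return (px + 0.5) / width, (py + 0.5) / height


def texel_at(buffer, u, v):
    """Nearest texel for a UV, clamped to the buffer's edges."""
    h, w = len(buffer), len(buffer[0])
    x = min(w - 1, max(0, int(u * w)))
    y = min(h - 1, max(0, int(v * h)))
    return buffer[y][x]


def depth_fade(color, depth):
    """Scale each colour pixel by (1 - depth), both read at the same screen UV."""
    h, w = len(color), len(color[0])
    out = []
    for py in range(h):
        row = []
        for px in range(w):
            u, v = screen_uv(px, py, w, h)
            row.append(texel_at(color, u, v) * (1.0 - texel_at(depth, u, v)))
        out.append(row)
    return out


color = [[1.0, 1.0],
         [1.0, 1.0]]
depth = [[0.0, 0.5],
         [0.25, 1.0]]
faded = depth_fade(color, depth)
```

Because every lookup goes through a UV, the depth buffer doesn't have to match the colour buffer's resolution.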
